Source code for this article may be found here.


Note for nerds: The code shown in this article may be incomplete and may not contain all the security checks you would usually perform in your code as it is given here for demonstration purposes only. Downloadable source code may contain bugs (there is no software without bugs at all). It is provided as is without any warranty. You may use and redistribute it as you wish while mentioning this site and the author.

I was recently digging through my sources and came across a small ANN (artificial neural network) library I wrote several months ago in 64-bit Intel assembly language (FASM syntax). I decided to share it with my respected readers, hoping that it may be useful in some cases.

Artificial Neural Network

The Internet is full of articles covering this topic, either in general or in depth. Personally, I would prefer not to create yet another clone with pictures of synapses, etc. In short, an ANN is a computational model inspired by the way our brain seems to work. There is a good article on Wikipedia that explains it well. It is worth mentioning that when people say "ANN" they usually think of a perceptron or a multilayer perceptron, but there are many more types out there. You should check out this article if you are interested.

However, this article covers the implementation of a multilayer perceptron in Assembly language, which turns out to be easier than it sounds. The library is suitable for creating multilayer perceptrons with any number of hidden layers and any number of input and output neurons, although it is bound to 64-bit Linux. I will try to explain how you can change the code to make it compatible with 64-bit Windows, but it would take much more effort to actually rewrite the whole thing to run on 32-bit platforms.

Neuron

This is the basis of the whole project. The neuron is the main part of the calculation. In this example, all neurons are arranged into a linked list, with the input neurons at the beginning of the list and the output neurons at its end. It is important to mention that they are all processed in the same order in which they appear in the linked list. First of all, let's define a structure that contains all the information we need for a single neuron:

struc list

{

.prev_ptr dq ?

.next_ptr dq ?

}

struc neuron

{

.list list ;Pointers to previous and next neurons

.input dq ? ;Pointer to the first input synapse

.output dq ? ;Pointer to the first output synapse

.value dq ? ;Resulting value of the neuron

.signal dq ? ;Error signal

.sum dq ? ;Sum of all weighted inputs

.bias dq ? ;Bias weight (threshold)

.bias_delta dq ? ;Bias weight delta

.index dw ? ;Index of the given neuron

.num_inputs dw ? ;Number of input synapses

.num_outputs dw ? ;Number of output synapses

.type dw ? ;Type of the neuron (bit field)

.size = $ - .list

}
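For readers who think in C, the two definitions above can be mirrored roughly as follows. This is only a sketch: the type names `list_sketch_t` and `neuron_sketch_t` are made up for illustration, the real declarations in "ann.h" may differ, and treating the `dq` value fields as `double` is an assumption. The compile-time checks only hold on a 64-bit target.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical C mirror of the FASM "list" structure. */
typedef struct list_sketch {
    void *prev_ptr;
    void *next_ptr;
} list_sketch_t;

/* Hypothetical C mirror of the FASM "neuron" structure. Field order and
   widths follow the assembly definition (dq = 8 bytes, dw = 2 bytes). */
typedef struct neuron_sketch {
    list_sketch_t list;      /* previous and next neurons   */
    void    *input;          /* first input synapse         */
    void    *output;         /* first output synapse        */
    double   value;          /* resulting value             */
    double   signal;         /* error signal                */
    double   sum;            /* sum of all weighted inputs  */
    double   bias;           /* bias weight (threshold)     */
    double   bias_delta;     /* bias weight delta           */
    uint16_t index;          /* index of this neuron        */
    uint16_t num_inputs;     /* number of input synapses    */
    uint16_t num_outputs;    /* number of output synapses   */
    uint16_t type;           /* type of the neuron (bits)   */
} neuron_sketch_t;

/* The assembly computes .size = $ - .list, which adds up to 80 bytes. */
static_assert(sizeof(neuron_sketch_t) == 80, "neuron should be 80 bytes");
static_assert(offsetof(neuron_sketch_t, value) == 32, "value at offset 32");
static_assert(offsetof(neuron_sketch_t, index) == 72, "index at offset 72");
```

The four 16-bit fields at the end fill exactly one quadword, so the structure needs no padding.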

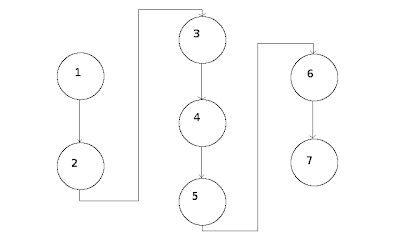

Figure 1 shows the arrangement of neurons in a perceptron designed to perform the XOR operation. It has two input neurons, three neurons in the hidden layer and two output neurons. Arrows show the order of processing.

Figure 1

I implemented this perceptron with 2 output neurons for testing purposes only, as it could well be implemented with a single output neuron, where output value > 0.5 would be 1 and below would be 0.

Synapse

There would be no perceptron without synaptic links. This is where the following structure appears on the scene.

struc synaps

{

.inputs list ;Pointers to previous and next input synapses

;if such exist

.outputs list ;Pointers to previous and next output synapses

;if such exist

.value dq ? ;Value to be transmitted

.weight dq ? ;Weight of the synapse

.delta dq ? ;Weight delta

.signal dq ? ;Error signal

.input_index dw ? ;Index of the input neuron in the list of neurons

.output_index dw ? ;Index of the output neuron in the list of neurons

dd ? ;Alignment

.size = $ - .inputs

}

At first, it may be a bit hard to understand why there are so many pointers in both structures. Unfortunately, my verbal abilities are far from perfect (especially given that English is not my mother tongue), so let me first illustrate the way neurons are interconnected with synapses in this implementation (in the hope that my graphic abilities are no worse than my verbal ones).

[Figure 2]

Figure 2 shows that each neuron (except the output ones) has a pointer (neuron.output) to a list of synapses that need to be fed with this neuron's calculated value. For a given neuron, its output synapses are linked together through their synaps.outputs pointers. In turn, each neuron (except the input ones) has a pointer (neuron.input) to a list of synapses to collect inputs from, linked through their synaps.inputs pointers. In the figure, each gray arrow goes from a neuron in the left layer to a neuron in the right layer through the corresponding synaptic link.
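The idea that one synapse sits on two lists at once can be sketched in C. This is a simplified illustration, not the library's code: the names `syn_t`, `node_t` and `link_neurons` are hypothetical, and the lists here are singly linked, whereas the assembly structures keep both previous and next pointers.

```c
#include <assert.h>
#include <stddef.h>

typedef struct syn  syn_t;
typedef struct node node_t;

struct syn {
    syn_t  *next_input;   /* next synapse feeding the same target neuron */
    syn_t  *next_output;  /* next synapse fed by the same source neuron  */
    double  value;
    double  weight;
};

struct node {
    syn_t *input;         /* head of the list of synapses to read from   */
    syn_t *output;        /* head of the list of synapses to feed        */
};

/* Put one synapse on two lists at once: the output list of `from`
   and the input list of `to`. New entries are prepended. */
void link_neurons(node_t *from, node_t *to, syn_t *s, double weight)
{
    assert(from && to && s);
    s->weight = weight;
    s->value = 0.0;
    s->next_output = from->output;
    from->output = s;
    s->next_input = to->input;
    to->input = s;
}

int count_inputs(const node_t *n)
{
    int c = 0;
    for (const syn_t *s = n->input; s; s = s->next_input) c++;
    return c;
}

int count_outputs(const node_t *n)
{
    int c = 0;
    for (const syn_t *s = n->output; s; s = s->next_output) c++;
    return c;
}

/* Demo: two input neurons connected to a single hidden neuron. */
int demo_links_ok(void)
{
    node_t in1 = {0}, in2 = {0}, hidden = {0};
    syn_t s1, s2;
    link_neurons(&in1, &hidden, &s1, 0.5);
    link_neurons(&in2, &hidden, &s2, -0.5);
    return count_inputs(&hidden) == 2 && count_outputs(&in1) == 1
        && count_outputs(&in2) == 1 && hidden.output == NULL;
}
```

Walking `input` visits every synapse that ends at a neuron, while walking `output` visits every synapse that starts at it, which is all the forward and backward passes need.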

Processing a Single Neuron

Each neuron in the network is processed by the same function, whose C prototype looks like this:

void neuron_process(neuron_t* n, int activation_type);

where n is a pointer to the neuron we want to process and activation_type specifies which activation function should be used. This implementation only has one activation function, the logistic (sigmoid) function:

f(x) = 1.0 / (1.0 + exp(-2 * x))

The following piece of code is an Assembly implementation of EXP():

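;double exp(double d)

;Computes e^d as 2^(d * log2(e)) on the x87 FPU

exp:

push rbp

mov rbp, rsp

sub rsp, 8 ;Scratch qword at [rbp-8]

push rbx rcx rdx rdi rsi

movsd qword [rbp-8], xmm0

fld qword [rbp-8] ;st0 = d

fldl2e ;st0 = log2(e), st1 = d

fmulp st1, st0 ;st0 = y = d * log2(e)

fld st0

frndint ;st0 = round(y), st1 = y

fsub st1, st0 ;st1 = y - round(y), the fractional part

fxch st1 ;st0 = fraction, st1 = integer part

f2xm1 ;st0 = 2^fraction - 1

fld1

faddp st1, st0 ;st0 = 2^fraction

fscale ;st0 = 2^fraction * 2^integer

fstp st1 ;Drop the integer part, keep the result

fstp qword [rbp-8]

fwait

movsd xmm0, qword [rbp-8] ;Return the result in xmm0

pop rsi rdi rdx rcx rbx

add rsp, 8

leave

ret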
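The same decomposition can be written in C to make the steps easier to follow. This is a sketch under assumptions, not a translation of the library: `exp_sketch` and `logistic_sketch` are hypothetical names, a short Taylor series stands in for the f2xm1 instruction, and a plain loop stands in for fscale. It is meant for moderate arguments only.

```c
#include <assert.h>

/* Sketch of e^x computed as 2^(x * log2(e)), for moderate |x| only. */
double exp_sketch(double x)
{
    const double LOG2E = 1.4426950408889634;  /* log2(e) */
    const double LN2   = 0.6931471805599453;  /* ln(2)   */

    assert(x > -700.0 && x < 700.0);          /* stay inside double range */

    double y = x * LOG2E;
    /* Round to the nearest integer (frndint analogue; ties round away
       from zero here, unlike the FPU default). */
    long   n = (long)(y + (y >= 0.0 ? 0.5 : -0.5));
    double f = y - (double)n;                 /* fraction in [-0.5, 0.5] */

    /* 2^f = e^(f * ln 2), summed as a Taylor series
       (stands in for f2xm1 followed by adding 1). */
    double t = f * LN2, term = 1.0, pow2f = 1.0;
    for (int k = 1; k <= 16; k++) {
        term *= t / k;
        pow2f += term;
    }

    /* Multiply by 2^n (fscale analogue). */
    double scale = 1.0;
    double step = (n >= 0) ? 2.0 : 0.5;
    for (long i = (n >= 0 ? n : -n); i > 0; i--)
        scale *= step;

    return pow2f * scale;
}

/* The activation function from the article: f(x) = 1 / (1 + exp(-2x)). */
double logistic_sketch(double x)
{
    return 1.0 / (1.0 + exp_sketch(-2.0 * x));
}
```

Splitting the exponent this way keeps the fractional part small, which is what f2xm1 requires and what makes a short series accurate in the C version.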

Now for x itself: x is the sum of the products of value and weight over all input synaptic links, plus the bias weight of the neuron. The result of f() is then stored in each and every output synaptic link (unless this is an output neuron), in accordance with the diagram shown in figure 3:

[Figure 3]
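The two halves of processing a neuron, collecting the weighted sum and then handing the result to the output links, can be sketched in C like this. The names `link_t`, `unit_t`, `unit_weighted_sum` and `unit_emit` are hypothetical and the lists are simplified to singly linked ones; the activation function is left out so that the data flow stays visible.

```c
#include <assert.h>
#include <stddef.h>

typedef struct link link_t;
struct link {
    link_t *next_input;   /* next synapse in the owner's input list  */
    link_t *next_output;  /* next synapse in the owner's output list */
    double  value;        /* value carried by the synapse            */
    double  weight;       /* weight of the synapse                   */
};

typedef struct unit {
    link_t *input;        /* first input synapse  */
    link_t *output;       /* first output synapse */
    double  bias;
    double  sum;
    double  value;
} unit_t;

/* x = bias + sum of value * weight over all input synapses. */
double unit_weighted_sum(unit_t *u)
{
    assert(u);
    double x = u->bias;
    for (const link_t *s = u->input; s; s = s->next_input)
        x += s->value * s->weight;
    u->sum = x;
    return x;
}

/* Keep the activated value in the neuron and copy it to every output
   synapse, so the next layer can read it from its input list. */
void unit_emit(unit_t *u, double activated)
{
    assert(u);
    u->value = activated;
    for (link_t *s = u->output; s; s = s->next_output)
        s->value = activated;
}

/* Demo: bias 0.25, inputs 1.0 * 0.5 and 0.5 * -2.0. */
double demo_weighted_sum(void)
{
    link_t b = { NULL, NULL, 0.5, -2.0 };
    link_t a = { &b,   NULL, 1.0,  0.5 };
    unit_t u = { &a, NULL, 0.25, 0.0, 0.0 };
    return unit_weighted_sum(&u);
}

/* Demo: emitting 0.5 to two output synapses. */
double demo_emit_total(void)
{
    link_t o2 = { NULL, NULL, 0.0, 1.0 };
    link_t o1 = { NULL, &o2,  0.0, 1.0 };
    unit_t u = { NULL, &o1, 0.0, 0.0, 0.0 };
    unit_emit(&u, 0.5);
    return o1.value + o2.value;
}
```

In a full pass, the value given to `unit_emit` would be f() applied to the result of `unit_weighted_sum`.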

The Net

We are almost done building our net. Let's define a structure that incorporates all the information about our perceptron and all the values needed for training and execution:

struc net

{

.neurons dq ? ;Pointer to the first neuron in the linked list of neurons

.outs dq ? ;Pointer to the first output neuron

.num_neurons dd ? ;Total amount of neurons

.activation dd ? ;Which activation method to use (we only have one here)

.qerror dq ? ;Mean quadratic error

.num_inputs dw ? ;Number of input neurons

.num_outputs dw ? ;Number of output neurons

.rate dq ? ;Learning rate regulates learning speed

.momentum dq ? ;Momentum (in standard back-propagation: share of the previous weight delta reused)

.size = $ - .neurons

}

The Fun

The source code attached to this article implements all the functions needed to manipulate the network. All functions are exported by the library and described in "ann.h". However, we only need to deal with a few of them:

net_t* net_alloc(void);

This function allocates the net_t object and returns a pointer.

void net_fill(net_t* net, int number_of_neurons, int number_of_inputs, int number_of_outputs);

This function populates the net with requested amount of neurons and sets all values and pointers accordingly.

void net_set_links(net_t* net, int* links);

This function is responsible for setting up all the synaptic links between neurons. While net is a pointer to a previously allocated net_t structure, links is a pointer to an array of integer pairs (source neuron index, target neuron index) terminated by a pair of 0's:

int pairs[][2]={

{1, 3},

{1, 4},

{2, 4},

{2, 5},

{3, 6},

{3, 7},

{4, 6},

{4, 7},

{5, 6},

{5, 7},

{0, 0}};

The above array is exactly the one used in the supplied test application to set up the links as shown in figure 3.
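To make the format of the links array concrete, here is a small C sketch that walks such a zero-terminated list. The helper names `count_links`, `fan_in` and `fan_out` are made up for this illustration and are not part of the library; `xor_pairs` repeats the array shown above.

```c
#include <assert.h>

/* The same link table as above: {from, to} pairs, ended by {0, 0}. */
const int xor_pairs[][2] = {
    {1, 3}, {1, 4}, {2, 4}, {2, 5},
    {3, 6}, {3, 7}, {4, 6}, {4, 7}, {5, 6}, {5, 7},
    {0, 0}
};

/* Number of links before the terminating {0, 0} pair. */
int count_links(const int (*pairs)[2])
{
    assert(pairs);
    int n = 0;
    while (pairs[n][0] != 0 || pairs[n][1] != 0)
        n++;
    return n;
}

/* Number of synapses ending at neuron `index`. */
int fan_in(const int (*pairs)[2], int index)
{
    int n = 0;
    for (int i = 0; pairs[i][0] != 0 || pairs[i][1] != 0; i++)
        if (pairs[i][1] == index)
            n++;
    return n;
}

/* Number of synapses starting at neuron `index`. */
int fan_out(const int (*pairs)[2], int index)
{
    int n = 0;
    for (int i = 0; pairs[i][0] != 0 || pairs[i][1] != 0; i++)
        if (pairs[i][0] == index)
            n++;
    return n;
}
```

Since {0, 0} is the terminator, neuron indices in this format start at 1. Note also that the table has type int[][2], so passing it to net_set_links, which takes an int*, needs a cast such as (int*)pairs.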

double net_train(net_t* net, double* values, double* targets);

This function does everything needed to train our perceptron using the back-propagation training paradigm. It returns the mean quadratic error of all output neurons (which is also accessible through net->qerror).

values - array of values to be fed into the net prior to running it (the function does not check whether the length of the array is appropriate, so your code is responsible for that);

targets - array of expected results.
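As an illustration of what a mean quadratic error over the output neurons can look like, here is a minimal C sketch. It assumes the plain definition (average of squared differences between target and output); the library may scale the value differently, and `mean_quadratic_error` is a hypothetical helper, not a function from "ann.h".

```c
#include <assert.h>
#include <stddef.h>

/* Average of (target - output)^2 over n output neurons.
   Assumed definition; the library's own scaling may differ. */
double mean_quadratic_error(const double *outputs,
                            const double *targets, size_t n)
{
    assert(outputs && targets && n > 0);
    double acc = 0.0;
    for (size_t i = 0; i < n; i++) {
        double d = targets[i] - outputs[i];
        acc += d * d;
    }
    return acc / (double)n;
}
```

For example, outputs of 0.5 and 0.5 against targets of 1.0 and 0.0 give (0.25 + 0.25) / 2 = 0.25.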

Because we are using the logistic activation function, it is necessary to normalize the input data so that every value x satisfies 0 < x < 1 (outputs will be in the same range as well).
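One simple way to do this normalization, and to map outputs back to the original scale, is a linear rescale. The sketch below is an assumption of mine, not something the library provides: `to_unit_range` and `from_unit_range` are hypothetical helpers, and the `margin` parameter is there only to keep values strictly inside the open interval.

```c
#include <assert.h>

/* Map v from [lo, hi] into [margin, 1 - margin]. */
double to_unit_range(double v, double lo, double hi, double margin)
{
    assert(hi > lo && margin >= 0.0 && margin < 0.5);
    return margin + (v - lo) / (hi - lo) * (1.0 - 2.0 * margin);
}

/* Inverse mapping: bring a network output back into [lo, hi]. */
double from_unit_range(double y, double lo, double hi, double margin)
{
    assert(hi > lo && margin >= 0.0 && margin < 0.5);
    return lo + (y - margin) / (1.0 - 2.0 * margin) * (hi - lo);
}
```

With data between 0.0 and 5.0 and a margin of 0.125, the value 2.5 is fed to the net as 0.5, and a net output of 0.5 maps back to 2.5.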

Run this function as many times as needed to bring the error down to a reasonable level. You will have to "play" with the rate and momentum parameters to find the best values, but you may start with 0.9 for rate and 0.02 for momentum. It is important to set those values explicitly, as the library does not check whether they have been set!
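To give a feeling for what rate and momentum do, here is the textbook back-propagation weight update in C. This is a sketch of the standard rule, not necessarily the exact arithmetic of the library; `weight_state_t` and `update_weight` are hypothetical names.

```c
#include <assert.h>

typedef struct weight_state {
    double weight;  /* current weight of the synapse     */
    double delta;   /* change applied on the last update */
} weight_state_t;

/* Textbook rule: the new delta is the gradient step scaled by the
   learning rate, plus a share of the previous delta set by momentum. */
void update_weight(weight_state_t *w, double rate, double momentum,
                   double signal, double input)
{
    assert(w);
    w->delta = rate * signal * input + momentum * w->delta;
    w->weight += w->delta;
}

/* Demo: rate 0.5, momentum 0.5, error signal 0.25, input 2.0,
   starting from weight 1.0 with a previous delta of 1.0. */
double demo_update(void)
{
    weight_state_t w = { 1.0, 1.0 };
    update_weight(&w, 0.5, 0.5, 0.25, 2.0);
    return w.weight;
}
```

A higher rate makes each step larger, while momentum smooths the path by carrying part of the previous step into the next one.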

void net_run(net_t* net, double* values);

This function is used in order to run the net.

values - same as in case of net_train function;

This function does not return a value, so you have to manually access net->outs.

Attached Source Code

The attached source code may be compiled with flat assembler:

fasm libann.asm libann.o

and linked to any C code compiled with GCC on 64 bit Linux.

Making the Code Windows Compatible

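This requires some work. First of all, you would have to edit the libann.asm file, changing format ELF64 to format MS64 COFF and the sections' attributes accordingly. You would also have to change the code itself, because 64-bit Linux uses the System V AMD64 ABI, while Microsoft has its own x64 calling convention. The major difference is in how parameters are passed to functions. On Linux, the first six integer or pointer arguments are passed in RDI, RSI, RDX, RCX, R8 and R9 and the first eight floating-point arguments in XMM0 - XMM7, with everything else on the stack in reverse order. Microsoft uses RCX, RDX, R8 and R9 for the first four integer arguments and XMM0 - XMM3 for the first four doubles, where each argument position takes either the integer or the XMM register of that slot, and any additional parameters are passed on the stack in reverse order. Keep in mind as well that on Windows the caller reserves 32 bytes of shadow space on the stack, and that RSI, RDI and XMM6 - XMM15 are callee-saved.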

Output

The output for the XOR problem should look similar to this:

[Output]

Thanks for reading! I hope this article was interesting and maybe even helpful.

See you in the next one!

Pretty good read, I'll have to check out more about Perceptrons to really understand this, Thanks

Any other ANN than multilayer perceptrons are of no use to mainstream machine learning and are usually just used in attempts to model cognition (though cognitive science has done away with ANN's a while ago and mostly focus on Bayesian Networks as models of cognition.. see Tenenbaum for more info on that). But with typical ANN (FANN for example), it is much faster to treat the network as matrices and do fast matrix multiplication techniques rather than propagate signals.

So my question, is it really worth it to run it in this way?

Yes, you are definitely right with both statements.

As to this specific implementation, the intentions were:

First, to make as close to the way perceptrons are usually explained

Second, to provide as much flexibility as possible in all that is related to perceptron's layout without scaring my readers (using Assembly and matrices in one sentence may already be scaring, no matter what you are trying to say ;-) )

Answering your question - yes, it works perfectly. Even with such problems as time series analysis.

Matlab users do it better :)

Bruno, let's face the truth - 99% of time matlab does it better for matlab users :)

Besides, this post is not about what's better.

Hi, my name is Rina, I’m the editor in chief at iMasters, one of the largest developer communities in Brazil. I'd like to talk to you about republishing a translated version of your articles. Can you please contact me (even for a no ;))? My email is rina.noronha@iimasters.com.br

Thanks Alexey, wonderful writeup and thanks for sharing your idea. Presently I am working on a "Robust Automatic Offline Handwriting Verification Algorithm" and am thinking of using NN. Although I will be using C, this has given me a good footing. I will ask more questions where necessary.

@ Bruno it is really not about what does it better (tools) but understanding the underlying principle, all the same nice observation.

Thanks! I am glad you find it useful.

By the way, I would personally recommend this book http://www.amazon.com/Neural-Networks-Comprehensive-Foundation-Edition/dp/0132733501 for in-depth explanation on ANNs

Dear contributors,

I have read your contributions with great interest. While I have never worked with the MATLAB software, my programming and application development experience has been with NEURALWARE. Has anybody in this forum used this fine software?

By the way thank you for the creative neural routine.

I am not aware of that product. But, honestly, their website sucks and a website is the face of the product.

But, maybe I am wrong.

How could I do to make me the results of for example from 0.0 to 5.0?

http://www.amazon.com/Neural-Networks-Comprehensive-Foundation-Edition/dp/0132733501 for in-depth explanation on ANNs